It seems that the recent error in the COVID infection stats may be down to the fact that they used MS Excel to record the infections:

https://www.mirror.co.uk/news/politics/16000-coronavirus-tests-went-missing-22794820

I'm completely ignorant of how one should handle big important datasets and even I am surprised that they were using Excel for such a job. I don't know what kind of software they should have been using though.

Any of the nerds on here know what they should have been using instead?

My academic twitter is suggesting R.

But how usable that is for everyone in the chain is probably a limiting factor.

I reckon some modification of sap would have been ideal.

would be ready to work amazingly sometime in 2022

They should have used a relational database. if they have access to MS Excel then they'll have MS Access which is a very basic DB - but they'd have been better with something more professional and scalable such as MS SQL server or an equivalent, given the size of the datasets they're talking about (if they almost "lost" 16000 records in one day)

you can **** things up in any package.

some understanding of the limitations of the tools you are using would have been a start.

Cardboard index cards.

sap

Sold a pup?

For something this critical, I'd be recommending something industry strength like Mircosoft SQL Server or Oracle Database / MySQL.

I agree with Jambo - excel is a riotously poor choice

can't understand why they aren't using something like an AWS or Azure hosted SQL database because that would happily handle the scale, the availability etc that they need and can integrate with whatever analytical tools (including the AWS / Azure native ones). Of course they have half-arsedely chucked a load of data sources together and collected the cash.

From what I've read (tweets so could be a load of cobblers) the data is stored in a DB and it's the export to PHE where excel is involved.

So need some decent ETL software - FME for the win 🙂

If ever the words 'should have been a database' were applicable it is here

Edit:

From what I’ve read (tweets so could be a load of cobblers) the data is stored in a DB and it’s the export to PHE where excel is involved.

Ah, I feel better already. Still not sure just why excel would have been used but not that surprised

What software (not excel) should Serco have used

I don't believe Serco actually had anything to do with handling the test data.

My academic twitter is suggesting R

So a bunch of people claiming their tool is better than the other one without either knowing how they screwed Excel up (we know if was a max rows issue, but not where in the data pipeline that appeared etc), or understanding that their tool (R) is not a system for storing data, but is one for munging it after you've got it (e.g. by consuming a s/sheet!). They *might* have better stats from R but its entirely possible to loose data in R too; its also not impossible that the stats people are using R to consume the data and that adds to the lack of visibility of the missing rows.

Cardboard index cards.

BoJo's little black book?

Lotus 123 or Quattro Pro obvs 🤪

I very much doubt they are using Excel for anything other than ad-hoc reporting.

There'll be some sort of Serco branded AWS-powered serverless cloud thing that it'll be built in. I would hope.

Isn't the whole point that trying to have one centralised database for the entire country was a completely stupid idea in the first place?

All the experts were telling them that it should be devolved to regional health authorities who could then use their local expertise in this field. So which software they chose to use wouldn't be an issue as they wouldn't be trying to manage an enormous unwieldy nationwide system

But then we've had enough of experts in this country, haven't we?

And its working out really, really well

"we know if was a max rows issue, but not where in the data pipeline that appeared etc"

Twitter also suggests it was a max column issue, so each record was stored/sent as a column not a row!

Pretty much any modern relational database could handle that, it's not a huge amount of data. The main point would be to get someone competent to build it, manage it and of course do back ups.

You'd also need a front end, but again that's hardly rocket science for a simple data entry system.

Once you've got the data into a database you can then use other tools (such as R) to analyse it.

Edit: seems it might have already been in a database, and the problem was with the incompetant data extraction using an Excel.

Twitter also suggests it was a max column issue, so each record was stored/sent as a column not a row!

This is my understanding. They used columns for cases, which is extremely strange.

Isn’t the whole point that trying to have one centralised database for the entire country was a completely stupid idea in the first place?

No because it isnt. Having a centralised db to have the data fed into when it is something relatively simple like this makes sense. Means you dont need to mess around sending test results to the right location and also allows easy peeping next door.

Using regional vs centralised track and trace is a separate discussion. Either can plug into the centralised test results.

can’t understand why they aren’t using something like an AWS or Azure hosted SQL database

Squeamishness (justified or not) about putting what is basically medical records on the Amazon cloud?

Twitter also suggests it was a max column issue

This is the strange thing...max COLUMNS not rows, how is it laid out? What's in each column?!

I can very much imagine someone high up saying they want the data in Excel, but they want it at the most detailed level so they can do whatever analysis they want on it. Which leads to someone having to export it from SQL, and that export being used for the 'sitreps' to DH/ministers which causes the problem, but it seems to be something else as it's affected the actual tracing.

can’t understand why they aren’t using something like an AWS or Azure hosted SQL database

AWS RDS is pretty nice if you just want something that works without having to think about it. I imagine Azure is similar.

This is my understanding. They used columns for cases, which is extremely strange

I find it hard to imagine the data being stored like that but easier to imagine an interface file being asked for in that format

Squeamishness (justified or not) about putting what is basically medical records on the Amazon cloud?

Given their habit of handing data over to the big providers I cant see that kicking in now. Plus they are being used already for some fairly sensitive stuff (well them or Azure/Google not sure which got what).

I find it hard to imagine the data being stored like that but easier to imagine an interface file being asked for in that format

Doesnt really make sense for the sort of data. A wide file is fine when you have fixed columns but generally far easier to work with a tall file when that is uncertain (or indeed when there is a shedload of them).

You guys are funny.

Exactly what Bails says, you can have the most fancy cloud whizz bang system you can think of, the very, very first thing people will start doing is extracting data into Excel so they can make pivot tables.

However in this case it sounds like they are rolling up the data into a reporting system (not Excel) but haven't managed to integrate all the disparate sources, so are using excel extracts as the source for upload. And it will have been a super daft "oh yeah, I didn't realise that" error, like not clearing down what should be a temporary table. We'll never know unless they decide to tell.

This isn't particularly a failure of the technology, it's a failure of validation.

I'm wondering if the op is the IT manager for Serco ?

So a bunch of people claiming their tool is better than the other one without either knowing how they screwed Excel up (we know if was a max rows issue, but not where in the data pipeline that appeared etc), or understanding that their tool (R) is not a system for storing data, but is one for munging it after you’ve got it (e.g. by consuming a s/sheet!). They *might* have better stats from R but its entirely possible to loose data in R too; its also not impossible that the stats people are using R to consume the data and that adds to the lack of visibility of the missing rows.

I think the fact that they ran into a max rows (or cols) issue just paints a picture of an inept government department way out of their depth unable to handle large volumes of data. But yes there probably are legality issues with large cloud computing platforms and NHS data.

At least people that know how to use R probably have *some* experience of dealing with datasets.

Isn’t the whole point that trying to have one centralised database for the entire country was a completely stupid idea in the first place?

I wouldn't say so. Make "region" an additional category/variable within a centralised database and you can separate regions. In my experience its much easier to extract data from a bigger dataset, than making two semi dependent datasets into one big database.

to OP, Excel is not a bad tool, but it seems they hadn't taken into account its limitations. Which is a flop at a very basic level.

The way it works with data:

1. You have your interface to interact with the program/enter unprocessed data.

2. Unprocessed Data analysis and (processed and unprocessed) organisation.

3. Data storage(processed and unprocessed).

4. Data exposition/interrogation (for example, how many infected per region).

Excel can do all of the above in one package, however it does not excel at any of them.

My academic twitter is suggesting R.

R and excel are not mutually exclusive. Whatever you do with R, the data has to be stored somewhere, this is where they may have made the mistake.

Exactly what Bails says, you can have the most fancy cloud whizz bang system you can think of, the very, very first thing people will start doing is extracting data into Excel so they can make pivot tables.

100% this, I'll never understand the current circlejerk elitism towards some of the basic MS tools.

I very much doubt they are using Excel for anything other than ad-hoc reporting.

It appears that excel sheet fed the wider track and trace? Or have I got it wrong? Why else is it being reported that tracing potential contacts was delayed a week?

I think as TiRed pointed out, with just a few thousand being tested earlier in the year, PHE opted for a quick and dirty solution in excel and never got around to adopting a more appropriate solution as the scope grew.

I think the fact that they ran into a max rows (or cols) issue just paints a picture of an inept government department

Inept government department or an inept outsourced set of experts?

Dont forget the failure by PHE/NHS at the beginning with the lighthouse labs turned out to be Deloitte and mates.

Chances are with the tories ideology of handing money to their mates the actual PHE staff have had to hand it to some consultant just out of uni who only knows powerpoint and excel.

And it will have been a super daft “oh yeah, I didn’t realise that” error, like not clearing down what should be a temporary table. We’ll never know unless they decide to tell.

The sheet ran out of rows is what happened and data that was supposed to be appended failed to be appended and nobody noticed for several days. This was spun as an IT error but it seems someone didn't understand that excel has a row limit. As someone who used excel before Office 2007, I was accurately aware of this because it used to be about 65 k max rows but was changed to 1 million rows. If you didn't use excel before Office 2007 you may never have run into this hard limitation.

Inept government department or an inept outsourced set of experts?

I suspect it's not either / or.

Maybe they didn't want to buy licences for the database to cover all users so extracted to Excel for sending to Track & Trace? Where I worked for 20 years we typed out timesheets into Excel and somebody retyped them into SAP.

A colleague who was ex-Royal Engineers ('sappers') reckoned his RE dictionary defined SAP as "To destroy by undermining"

This is quite a good explanation from the Guardian. It seems that some labs were sending in their results as a .csv to PHE who would import that data into excel. A .csv doesn't have a row limit but an excel sheet does, ergo any rows over a million will simply be culled during the import, and this wasn't spotted initially.

Presumably, the labs were just exporting all their tests to date each day into the .csv too. It all sounds terribly unsophisticated with a lot of redundant data handling and everything hinging on a dodgy excel sheet.

A non scalable system? IT delivered on the cheap (but part of an expensive government contact)? No one should be surprised.

.csv is a pretty decent way of exporting raw data, and easy to import into a db, as long as they are delivered consistantly, even if they aren't then there are tools that aren't excel that can edit them really, really, easily to put them into the format you need to import them into a proper database system, like MSSQL or ORACLE, which is exactly the kind of thing PHE should have been using from the start.

It's also possible that they were importing into Excel 2003 which has the much smaller row limit.

This made me laugh a lot:

I’m wondering if the op is the IT manager for Serco ?

Thanks for all the input folks. I had a suspicion that the view on my social media that 'EXCEL IS BAD' was misguided, even if its limits may have contributed towards this error.

I heard they were using 32bit excel so it just broke when they tried to exceed the number of available cells.

When watching the news on Saturday with Wife I said I bet this 'technical issue' is something really stupid like an excel spreadsheet running out of rows, so it was funny this morning when she shared this article with me.

The bottom line is the problem is with the people, not the technology, better people would put a better system in place or at least have the wherewithal to do a sanity check.

It wouldn't surprise me if they were using an xls file which has a 65,536 row limit, rather than xlsx which has 1,048,576 limit mentioned in the Guardian.

I guess there were three issues:

1) Involving manual processes like emailing around CSVs and manually compiling into database - rather than having automated and auditable processes.

2) Spreadsheet application being used to database, rather than a database application

3) Stupidity

Given the profile/level of investment and >6mths this has been going on for I wouldn't have thought it unreasonable for some kind of controlled system to be put in place e.g. with SQL database backend and frontends to interface with various users i.e. test centre upload results and enter summary information, administrators generate reports, test and tracers generate and manage cases etc.

Thanks for all the input folks. I had a suspicion that the view on my social media that ‘EXCEL IS BAD’ was misguided

The main things about Excel are:

Its on most peoples work machine.

Most people have some vague familiarity with it.

It is pretty capable especially when you throw VBA into the mix.

It is relatively easy to do a quick prototype in.

So when given a choice about gathering some proper requirements and then standing up a DB with a front end on it Excel often wins out as a shadow IT tool.

Given the profile/level of investment and >6mths this has been going on for I wouldn’t have thought it unreasonable for some kind of controlled system to be put in place e.g. with SQL database backend and frontends to interface with various users i.e. test centre upload results and enter summary information, administrators generate reports, test and tracers generate and manage cases etc.

I'm no IT expert but haven't there been a lot of problems rolling out such systems in the public sector?

I know for a fact that fire rescue has no central/national database and neither does town planning. All their data resides at the local level on a hotchpotch of paper and electronic records. It also make FOI requests problematic because they can claim compiling the data imposes an unreasonable cost.

That Guardian link doesn't make a whole lot of sense, difficult to say where the stupidity was introduced...

I can understand why it's still using a cobbled-together system relying on CSVs and some sort of fudged back-end though, government IT stuff just doesn't work at the sort of pace required for this sort of thing (outside of some specialist departments but I guess they weren't engaged). If Serco created the process and back-end then they'd still likely have been tied into a formal proposal, budget, design and change implementation approval process, that can take months even for relatively small projects (I work for an external IT provider to a government agency, not Serco though :p ).

It wouldn’t surprise me if they were using an xls file which has a 65,536 row limit, rather than xlsx which has 1,048,576 limit mentioned in the Guardian.

... and a 255 column limit IIRC. If they were outputting data in a cross tab-esque format, one day per column and started the spreadsheet back on Jan 23rd it would have shat itself two days ago.

Not unusual to dump data to the old format either.

There are default choices in the IT world. Data goes in a database, like MySQL, Oracle, MS SQL or IBM DB2. Unless there is a special requirement to do something else, use one of these.

Given the profile/level of investment and >6mths this has been going on for I wouldn’t have thought it unreasonable for some kind of controlled system to be put in place e.g. with SQL database backend and frontends

I’m no IT expert but haven’t there been a lot of problems rolling out such systems in the public sector?

There have, but this is not because of the technology. It's because of the way that govt departments approach IT, and the way IT suppliers approach govt departments. The govt depts say 'You are going to give us exactly this' and the IT suppliers say 'ok! No problem!' then they realise that 'this ' is full of holes, and they either plough on regardless saying 'well that's what you asked for' or 'but this isn't going to work' and the dept says 'don't try and get smart with us that's what we want' etc etc. It's a complete balls up, usually. Sometimes the supplier's fault, sometimes the dept's, sometimes both, sometimes the issue is external. Sometimes the supplier's salespeople say 'yes' to everything then the techies say 'but you haven't thought of this and that' and then it all falls to bits. See the youtube video 'The Expert' for a very very accurate example of this.

Any properly validated GDP compliant computer system would have been a ****ing start.

Or even a ****ing SOP with the line limit defined in it.

Pretty sure Azure have a GxP compliant package or guidelines on how to implement a GxP validated package.

I submit data from our laboratory to PHE on a regular basis, not covid related.

Our lab system runs an SQL which dumps a load of data into Excel. I "clean" the data, convert to CSV then upload to the PHE portal. This is a system they've had in place for years so I assume they've just modified it to accept covid related stuff from the lighthouse labs.

It's worth remembering that although there's a lot of good lab technicians out there, there's a lot of experts, there's a lot of knowledge and there's a lot of people grafting, we're all just plebs (well, some of us!) I'm my lab's IT rep, so things like data uploads are my responsibility. I know nothing about computers! No formal training, just do it because the previous guy retired and it fell to me. It's no surprise to me that something like this should go tits up.

I know nothing about computers! No formal training, just do it because the previous guy retired and it fell to me. It’s no surprise to me that something like this should go tits up.

If you guys were audited by the MHRA you'd get murdered to death. I would love to be the auditor doing the rounds around your lab.

WHOOOOOOOOOOOOOOOOOOOOOOOOOOOO! We're all going to die.

I’m no IT expert but haven’t there been a lot of problems rolling out such systems in the public sector?

I know for a fact that fire rescue has no central/national database and neither does town planning. All their data resides at the local level on a hotchpotch of paper and electronic records. It also make FOI requests problematic because they can claim compiling the data imposes an unreasonable cost.

On town planning, I would suspect its a way for local authorities to hold more power. On fire rescue I have no idea. A challenge that such systems would face is dealing with hystorical standards of how data was compiled and stored, that could be a massive ball-ache. I don't think this applies in this case, since I assume they are being sent consistent .csv files. Given the time and money, this blunder seems completely unreasonable.

If Serco created the process and back-end then they’d still likely have been tied into a formal proposal, budget, design and change implementation approval process, that can take months even for relatively small projects (I work for an external IT provider to a government agency, not Serco though :p ).

I'd like to know what ****ing experience Serco has of maintaining GxP compliant databases.

Our lab system runs an SQL which dumps a load of data into Excel. I “clean” the data, convert to CSV then upload to the PHE portal. This is a system they’ve had in place for years so I assume they’ve just modified it to accept covid related stuff from the lighthouse labs.

Pretty common exchange mechanism, we use it a lot in Telecoms. Our system has dB type a, their system has dB type b. Simplest export / import is a CSV file. I write a script to dump out the data as csv and ssh it onto their Oracle server and they write a script to suck it in from csv.

@molgrips serco isn’t a gov dept and not all gov depts operate in the way you describe. GDS for all it’s failures is at least facilitating better ways of working.

Contrasting this debacle is what HMRC doing. A mate of mine is the PO on the team that stood up the eat out to help out systems (register, find a participating restaurant, and claim) in 3 2 week sprints.

Parts of the civil service with the aid of switched on suppliers and contractors do have the skills and experience to do thus sort of thing properly; serco don’t.

Simplest export / import is a CSV file.

That’s completely normal. It’s the “load it into excel and get a human to clean it up”, which is the broken link in the chain.

Our lab system runs an SQL which dumps a load of data into Excel. I “clean” the data, convert to CSV then upload to the PHE portal.

I hope you use control totals and sanity check the exported data. Excel can have a mind of its own, even with .csv

Was reading an article earlier today about it. My first thought was 1 million rows in Excel. I'm sure it used to be much smaller than that. But years ago we ran into a similar issue when someone used Excel to open up a big CSV file. Think it was a SNOMED dataset or similar. Simple way round it was to create an SSIS package to import it into SQL server.

Sounds like they've struggled to homogenise a series of disparate data sources and haven't thought about volumetrics.

TBH I've never been able to fathom why the row limit on Excel is so high. I know it's the de-facto tool to use for any sort of data and usually used incorrectly. But a limit of say 10,000 rows would be a good artificial limit to force people to put data into a more appropriate system.

Our lab system runs an SQL which dumps a load of data into Excel. I “clean” the data, convert to CSV then upload to the PHE portal. This is a system they’ve had in place for years so I assume they’ve just modified it to accept covid related stuff from the lighthouse labs.

Get SQL to output it as a CSV file rather than an Excel and you having to clean it. Should be able to use a simple view or such like to clean the data for you in Excel.

⌨⌨⌨⌨⌨⌨⌨

🐒🐒🐒🐒🐒🐒🐒

Thought I had a solution, but my monkeys won't align with the keyboards when scaled up 🙁

A mate of mine is the PO on the team that stood up the eat out to help out systems (register, find a participating restaurant, and claim) in 3 2 week sprints.

You're right, and the last two gigs I went on were run like this so things are changing. Thank god.

Get SQL to output it as a CSV file rather than an Excel and you having to clean it. Should be able to use a simple view or such like to clean the data for you in Excel.

And here is the problem. Who is going to do that? Is there access to the system to do that? Is the vendor that wrote/installed it able to make these changes (their guy quit years ago and they don't make enough money to hire a new one)? Etc. Etc.

Multiply by all the labs out there and .csv it is.

I had an issue recently importing data into SQL Server (SSIS), the import would work but depending on the size of the file it would fail so under X mb it would work, it turned out to be a permission that had to be granted to the default user internet files directory, my understanding is the size of the file that would be accepted was down to how much memory the server had, so would be different in another server with more or less memory!

This was my first thought when I heard it was to do with the size of an Excel file lol.

I find the biggest issue with Excel, especially if macros are involved, is if somebody is on a different version. Defunct formulae ob newer versions or limited number of rows in older can throw a right spanner in the works.

I find the biggest issue with Excel, especially if macros are involved, is if somebody is on a different version. Defunct formulae ob newer versions or limited number of rows in older can throw a right spanner in the works.

I have a VM with extra OS build / Office combo to test for such things. Still doesn't catch everything - Anti Virus apps hate VBA, diff sys Admins lock down machines in different ways blocking access to various directories or moving default locations around. Always some machine it won't work on. Russian users with Cyrillic alphabets were a PITA to support.

Why does the data need cleaning in the first place? Surely data validation at input should remove that need. The fact they are using excel to move patient identifiable data around is ridiculous and provides an other good reason not to give them any personal data

This is ‘chickens coming home to roost’ for the DHSC/NHSE/I/NHSDigital etc etc. Excel is the tool of choice for them and poor NHS Trusts are driven mad by the many, many data returns they have to make on Excel spread sheets. It’s got particularly bad during COVID as all sorts of non-job departments want some information to crunch so they have something to do when they have got bored of Netflix. Hopefully, this debacle might change all of that but.

It’s 2020 the answer to any data question you ask is not Excel but PowerBI 😉

According to the BBC

The issue was caused by the way the agency brought together logs produced by the commercial firms paid to carry out swab tests for the virus.

They filed their results in the form of text-based lists, without issue.

PHE had set up an automatic process to pull this data together into Excel templates so that it could then be uploaded to a central system and made available to the NHS Test and Trace team as well as other government computer dashboards.

The problem is that the PHE developers picked an old file format to do this - known as XLS.

As a consequence, each template could handle only about 65,000 rows of data rather than the one million-plus rows that Excel is actually capable of.

And since each test result created several rows of data, in practice it meant that each template was limited to about 1,400 cases. When that total was reached, further cases were simply left off.

Yes but just imagine the moment where the penny dropped and the poor guy responsible realised. Oh to have been a fly on the wall. I mean, I once ‘accidentally’ took out a large part of of the mobile network of a European capital city for an hour because I thought I was typing in another window. How we laughed (later, much later)

If that bbc report is accurate, sounds like it was an old crappy system thats been there for ages. I can’t imagine anyone using excel in this way in the last 10 years. But who knows eh!

The problem is that the PHE developers picked an old file format to do this – known as XLS.

As a consequence, each template could handle only about 65,000 rows of data rather than the one million-plus rows that Excel is actually capable of.

That's interesting and means the Guardian were wrong, although in both scenarios the issue was the sheet maxing out on rows. In that sense, the Guardian was half right.

Of course, using the 32-bit version of excel was only half the problem because excel is very much a quick and dirty 'database' or intermediary between databases. Perhaps understandable given the rushed nature of the track and trace, but otoh Boris boasted about it being world-class?!

But a limit of say 10,000 rows would be a good artificial limit to force people to put data into a more appropriate system.

And that is in MS interest why? What are the chances of them going to sqlserver.

There is a good reason it went up from 65k rows to a million and a bit.

Serco provide testing at a few sites they don’t collate the

data, there’s numerous companies involved in the entire process.

If excel is the basis of a world class track and trace system then presumably Hornby are building hs2

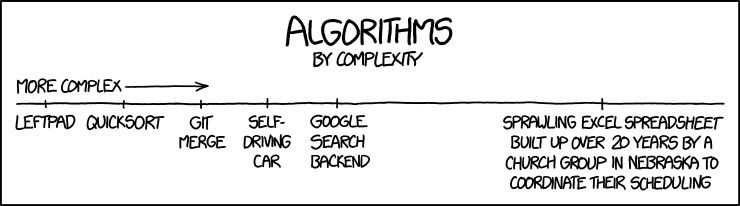

Essentially some-one has tried using a hammer on a screw. It's the wrong tool for the job. End of discussion.

Personally, I blame Tory voters.

Why be in the EU for consistent standards across several jurisdictions?

Let's **** our NHS instead...

To all those commenting about the ineptitude involved in using Excel for this, the start of this seems to be a CSV file which is not Excel, but a simple file format that was created before personal computers and Excel (1972 according to wiki).

Twisty seems close to the money shot with;

Involving manual processes like emailing around CSVs

Databases can import/export CSV files (as can Excel), so there is a good chance that Twisty has also got close to the bullseye with;

It wouldn’t surprise me if they were using an xls file which has a 65,536 row limit, rather than xlsx which has 1,048,576 limit mentioned in the Guardian.

EDIT - But I really like Pyros post 😀

To all those commenting about the ineptitude involved in using Excel for this,

CSV is a perfectly valid and robust data format that I use daily. And it doesn’t have a 65536 row limit.

As I said way up there the problem isn’t the tools, it’s the lack of understanding.

Most data people will have had that sinking feeling when you realise youve ****ed something up. I bet someone had a really bad Friday afternoon at PHE when they noticed that all the labs were reporting exactly the same number of test results...

As I said way up there the problem isn’t the tools, it’s the lack of understanding.

If you're using excel as a database, or intermediate/pseudo database, it isn't the best choice because it doesn't offer up much in the way of validation/safeguarding data. This is one reason they came unstuck. Systems entirely reliant on expert user inputs aren't a good choice for most applications. People are fallible.

Two pages in and no mention of PostgreSQL. Very disappointing.

Without wanting to defend the govt, and especially not wanting to offend fellow IT professionals, I suspect I know what happened. Some project manager somewhere will have asked 'What do we need to build to store all testing data for the whole country and provide real-time reporting capability which can be analysed by a multitude of agencies?'. The answer will have been a scalable cloud based data warehouse solution with real-time reporting, support for machine learning and statistical modelling, complex ETL and data QA processes and all the other bells and whistles. Then they'd have asked how long will it take (assuming money no object, which seems like a safe assumption)? The answer will have been at least 12 months to collect requirements, architect, build and test an alpha version. In the meantime some consultant with excel, python and possibly R will have knocked up a quick and dirty solution in a couple of days which answers the questions being asked by people in boardrooms who don't care about IT. Guess which one they went with?

MongoDB because the data is "big data" (complex and not very relational).

The issue with Excel was not a rows issue but a columns issue (each subject case entered as a rather then a row).

Regardless of whether they were using Excel to store primary data or not, that such a key piece of infrastructure is so flimsily constructed is shocking beyond believe. (See also RBS/Natwest offshoring support for 30 year old servers - then discovering nobody knew how the things worked. That's why there atm network goes titsup periodically).

The correct answer is:

1. Database (Google Cloud, Amazon AWS or Microsoft Azure - can be simple MySQL / S3), data streams or simple files > 2. Into ETL (e.g Talend / Microsoft / SAP / Fivetran) > 3. Data Warehouse / Lake (e.g Snowflake / Big Google BigQuery / Amazon RDS > 4. Visualisation / Analytics Layer (The bit the muppets should be using to view, extract and cut data instead of running pivot tables in Excel (e.g Looker / Tableu / Qlik).

3 months work at normal pace, with budget could be pulled off in one month.

Scalable system that can be used to model all things Covid until the end of time for around the same price the government paid for their Excel spreadsheet.

Every half decent tech co works like this. Any recent grad from a half decent comp sci course doing data at any half decent co would know.

One analyst call with any of the leading tech agencies would have pointed them this way.

Pay peanuts, get monkeys.

Oracle Life Science Database engine with a SAS analytics engine pulling data via SQL. R is for amateurs. You get what you pay for.